Interactive Language Learning

Nadav Lidor, Sida I. Wang 12/14/2016

Today, natural language interfaces (NLIs) on computers and phones are typically trained once and then deployed, and users must simply live with their limitations. Allowing users to demonstrate or teach the computer appears to be central to making NLIs more natural and usable. Research on language acquisition offers considerable evidence that human children require interaction to learn language, as opposed to passively absorbing it, for example by watching TV (Kuhl et al., 2003; Sachs et al., 1981). It also suggests that, rather than consciously analyzing increasingly complex linguistic structures (e.g., sentence forms, word conjugations), humans advance their linguistic ability through meaningful interactions (Krashen, 1983).

In contrast, the standard machine learning setting, built on a fixed dataset, involves no interaction: the feedback is fixed in advance and does not depend on the state of the system or the actions it takes. We think interactivity is important, and that an interactive language learning setting will enable adaptive and customizable systems, especially for resource-poor languages and new domains where starting from close to scratch is unavoidable.

We describe two attempts towards interactive language learning: an agent for manipulating blocks, and a calendar scheduler.

Language Games: A Blocks-World Domain to Learn Language Interactively

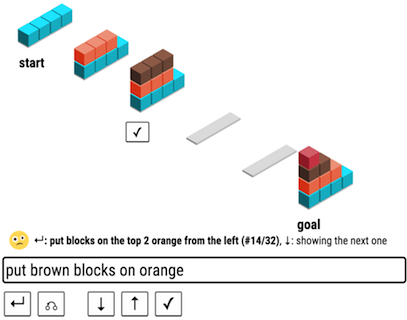

Inspired by the human language acquisition process, we investigated a simple setting where language learning starts from scratch. We explored the idea of language games, in which the computer and the human user must collaborate to accomplish a goal even though they do not initially share a common language. Specifically, in our pilot we created a game called SHRDLURN, in homage to Terry Winograd's seminal work on SHRDLU. As shown in Figure 1a, the objective is to transform a start state into a goal state, but the only action the human can take is entering an utterance. The computer parses the utterance and produces a ranked list of possible interpretations according to its current model. The human scrolls through the list and chooses the intended one, simultaneously advancing the state of the blocks and providing feedback to the computer. Both the human and the computer want to reach the goal state (known only to the human) with as little scrolling as possible. To succeed, the computer has to learn the human's language quickly over the course of the game, so that the human can accomplish the goal more efficiently. Conversely, the human can also speed up progress by accommodating the computer, that is, by at least partially understanding what it can and cannot currently do.
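The round structure just described can be sketched in a few lines of Python. This is a minimal illustration under loud assumptions: the state is a flat tuple of block colors rather than stacks, the only logical forms are the hypothetical `remove(color)` and `add(color)`, and `candidates`, `execute`, and `play_round` are names invented here, not part of the SHRDLURN code. The simulated human scrolls through the ranked list until an interpretation produces the intended state; the number of scrolls is the cost both players want to minimize, and the chosen interpretation doubles as feedback.

```python
from typing import Callable, List, Optional, Tuple

State = Tuple[str, ...]        # toy state (assumption): a row of block colors
LogicalForm = Tuple[str, str]  # toy logical form (assumption): (action, color)

COLORS = ("red", "blue", "green")


def candidates() -> List[LogicalForm]:
    # With no seed lexicon, every logical form is a candidate for every utterance.
    return [(a, c) for a in ("remove", "add") for c in COLORS]


def execute(lf: LogicalForm, state: State) -> State:
    action, color = lf
    if action == "remove":
        return tuple(b for b in state if b != color)
    return state + (color,)


def play_round(utterance: str, state: State,
               score: Callable[[str, LogicalForm], float],
               intended: State) -> Tuple[int, Optional[LogicalForm], State]:
    """Rank interpretations; the simulated human scrolls to the one giving `intended`.

    Returns (scrolls needed, chosen logical form or None, new state)."""
    ranked = sorted(candidates(), key=lambda lf: score(utterance, lf), reverse=True)
    for scrolls, lf in enumerate(ranked):
        result = execute(lf, state)
        if result == intended:
            return scrolls, lf, result  # the choice is also the feedback signal
    return len(ranked), None, state  # no candidate matched; state unchanged


untrained = lambda utterance, lf: 0.0  # all interpretations tie before any learning
scrolls, chosen, new_state = play_round(
    "remove blue", ("red", "blue", "red"), untrained, ("red", "red"))
print(scrolls, chosen, new_state)  # 1 ('remove', 'blue') ('red', 'red')
```

Because Python's sort is stable, an untrained scorer simply presents candidates in generation order, so the human pays the full scrolling cost; a learned scorer is what shortens it.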

We model the computer as a semantic parser (Zettlemoyer and Collins, 2005; Liang and Potts, 2015), which maps natural language utterances (e.g., ‘remove red’) into logical forms (e.g., remove(with(red))). The semantic parser has no seed lexicon and no annotated logical forms, so it simply generates many candidate logical forms. It learns from the human's feedback by adjusting the parameters of simple, generic lexical features. It is crucial that the computer learns quickly; otherwise users become frustrated and the system is less usable. In addition to engineering features and tuning online learning algorithms, we increased learning speed by incorporating pragmatics.
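A common way to realize this kind of learner is a log-linear model over candidate logical forms, with p(z | x) proportional to exp(theta · phi(x, z)), where phi pairs each utterance word with each predicate and theta is updated after every choice. The sketch below assumes exactly that, with a plain stochastic gradient step; the toy `(action, color)` logical forms, the `OnlineParser` class, and the learning rate are hypothetical, and it leaves out the pragmatic reasoning and the tuned online optimizer the paragraph mentions.

```python
import math
from collections import defaultdict
from typing import Dict, List, Tuple

LogicalForm = Tuple[str, str]  # toy logical form (assumption): (action, color)
Feature = Tuple[str, str]      # (utterance word, logical-form predicate)


def features(utterance: str, lf: LogicalForm) -> List[Feature]:
    # Simple, generic lexical features: pair every word with every predicate.
    return [(w, p) for w in utterance.lower().split() for p in lf]


class OnlineParser:
    """Log-linear ranker p(z | x) proportional to exp(theta . phi(x, z)), trained online."""

    def __init__(self, candidates: List[LogicalForm], lr: float = 0.5) -> None:
        self.candidates = candidates  # no seed lexicon: every candidate is always considered
        self.lr = lr
        self.theta: Dict[Feature, float] = defaultdict(float)

    def score(self, utterance: str, lf: LogicalForm) -> float:
        return sum(self.theta[f] for f in features(utterance, lf))

    def probs(self, utterance: str) -> List[float]:
        scores = [self.score(utterance, lf) for lf in self.candidates]
        top = max(scores)  # subtract the max for numerical stability
        exps = [math.exp(s - top) for s in scores]
        total = sum(exps)
        return [e / total for e in exps]

    def rank(self, utterance: str) -> List[LogicalForm]:
        return sorted(self.candidates, key=lambda lf: self.score(utterance, lf), reverse=True)

    def update(self, utterance: str, chosen: LogicalForm) -> None:
        # One SGD step on log p(chosen | utterance):
        # gradient = phi(x, chosen) - E_{z ~ p(z | x)}[phi(x, z)]
        p = self.probs(utterance)
        for f in features(utterance, chosen):
            self.theta[f] += self.lr
        for prob, lf in zip(p, self.candidates):
            for f in features(utterance, lf):
                self.theta[f] -= self.lr * prob


cands = [(a, c) for a in ("add", "remove") for c in ("red", "blue", "green")]
parser = OnlineParser(cands)
print(parser.rank("remove red")[0])  # ('add', 'red'): all scores tie before any feedback
parser.update("remove red", ("remove", "red"))  # the human picked remove(red)
print(parser.rank("remove red")[0])  # ('remove', 'red')
```

A single example moves the intended interpretation to the top for that utterance; generalizing to unseen word combinations requires several examples, since each word initially associates equally with every predicate in its chosen logical form.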

What is special here is the real-time nature of learning: the human also learns and adapts to the computer, which makes good task performance easier to achieve. While the human can teach the computer any language (in our pilot, Mechanical Turk users tried English, Arabic, Polish, and a custom programming language), a good human player will choose utterances that the computer is likely to learn quickly.

You can find more information in the SHRDLURN paper, along with a demo, code, data, and experiments on CodaLab, and the client-side code.

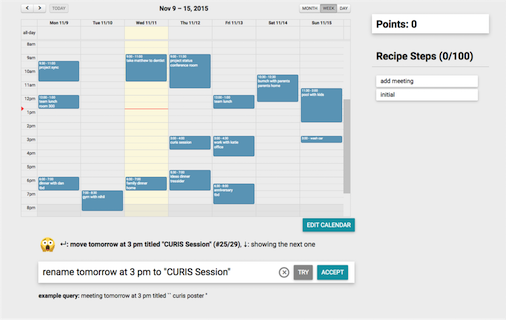

1a: SHRDLURN (top) and 1b: SCHEDLURN (bottom)

Figure 1: 1a: A pilot for learning language through user interaction. The system attempts an action in response to a user instruction and the user indicates whether it has chosen correctly. This feedback allows the system to learn word meaning and grammar. 1b: the interface for interactive learning in the calendars domain.

A Calendar Employing Community Learning with Demonstration

Many challenges remain if we want to advance to NLIs for broader domains. First, in order to scale to more open, complex action spaces, we need richer feedback signals that are both natural for humans and useful for the computer. Second, to allow for quick, generalizable data collection, we seek to support collective, rather than individual, languages, in a community-based learning framework. We now outline our first attempt at addressing these challenges and scaling the framework to a calendar setting. You can find a short video overview.

Event scheduling is a common yet unsolved task: while several available calendar programs allow limited natural language input, in our experience they all fail as soon as they are given something slightly complicated, such as ‘Move all the tuesday afternoon appointments back an hour’. We think interactive learning can give us a better NLI for calendars, which has more real-world impact than blocks world. Furthermore, since we aimed to expand our learning methodology from definition to demonstration, we chose this domain because most users are already familiar with the common calendar GUI and have an intuition for manipulating it manually. Additionally, since calendar NLIs are already deployed, particularly on mobile, we hoped users would naturally be inclined to use natural-language phrasing rather than the more technical language we saw in the blocks world domain. Lastly, a calendar is a considerably more complex domain, with a wider set of primitives and possible actions, which allows us to test our framework with a larger action space.

Learning from Demonstration and Community

In our pilot, user feedback was provided by scrolling and selecting the proper action for a given utterance, a process that is both unnatural and unscalable for large action spaces. Feedback signals in human communication include reformulations, paraphrases, repair sequences, etc. (Clark, 1996). We expanded our system to receive feedback through demonstration, as it is 1) natural for people, especially when using a calendar, which allows easy data collection, and 2) informative for language learning, in a form that current machine learning methods can leverage. In practice, if the correct interpretation is not among the top choices, the system falls back to a GUI, and the user uses the GUI to show the system what they meant. Algorithms for learning from denotations are well-suited for this, and the interactivity can potentially help in the search for the latent logical forms.
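Learning from a demonstration can be sketched as learning from denotations: the user supplies only the target calendar state, every candidate logical form that executes to that state counts as correct, and the update raises their total probability (the marginal likelihood). The toy calendar below, with hypothetical `set` and `shift` operations and the invented helpers `candidates` and `learn_from_demonstration`, is an assumption made for illustration; it is not the calendar system's actual representation or training code.

```python
import math
from collections import defaultdict
from typing import Dict, List, Tuple

Calendar = Tuple[Tuple[str, int], ...]  # toy state (assumption): sorted (event, start hour) pairs
LogicalForm = Tuple[str, str, int]      # toy (assumption): ("set", event, hour) or ("shift", event, delta)
Feature = Tuple[str, str]               # (utterance word, predicate)


def execute(lf: LogicalForm, cal: Calendar) -> Calendar:
    op, event, arg = lf
    events = dict(cal)
    events[event] = arg if op == "set" else events[event] + arg
    return tuple(sorted(events.items()))


def candidates(cal: Calendar) -> List[LogicalForm]:
    names = [name for name, _ in cal]
    lfs = [("set", n, h) for n in names for h in range(8, 19)]
    lfs += [("shift", n, d) for n in names for d in (-2, -1, 1, 2)]
    return lfs


def features(utterance: str, lf: LogicalForm) -> List[Feature]:
    op, event, arg = lf
    predicates = [op, event, f"{op}:{arg}"]
    return [(w, p) for w in utterance.lower().split() for p in predicates]


def score(theta: Dict[Feature, float], utterance: str, lf: LogicalForm) -> float:
    return sum(theta[f] for f in features(utterance, lf))


def rank(theta: Dict[Feature, float], utterance: str, cal: Calendar) -> List[LogicalForm]:
    return sorted(candidates(cal), key=lambda lf: score(theta, utterance, lf), reverse=True)


def learn_from_demonstration(theta: Dict[Feature, float], utterance: str,
                             cal: Calendar, target: Calendar, lr: float = 0.5) -> int:
    """One gradient step on log sum_{z : execute(z) == target} p(z | x).

    Returns how many candidates were consistent with the demonstration."""
    cands = candidates(cal)
    scores = [score(theta, utterance, lf) for lf in cands]
    top = max(scores)  # subtract the max for numerical stability
    weights = [math.exp(s - top) for s in scores]
    total = sum(weights)
    consistent = {i for i, lf in enumerate(cands) if execute(lf, cal) == target}
    if not consistent:
        return 0  # the demonstration is outside the candidate space
    total_consistent = sum(weights[i] for i in consistent)
    for i, lf in enumerate(cands):
        # gradient = E_{p(z | x, consistent)}[phi] - E_{p(z | x)}[phi]
        coef = (weights[i] / total_consistent if i in consistent else 0.0) - weights[i] / total
        for f in features(utterance, lf):
            theta[f] += lr * coef
    return len(consistent)


theta: Dict[Feature, float] = defaultdict(float)
cal = (("golf lesson", 15), ("team lunch", 12))
target = (("golf lesson", 17), ("team lunch", 12))  # the user dragged the lesson in the GUI
n = learn_from_demonstration(theta, "push golf two hours later", cal, target)
print(n)  # 2: set(golf lesson, 17) and shift(golf lesson, +2) both reproduce the target
print(rank(theta, "push golf two hours later", cal)[:2])  # both consistent forms now rank first and second
```

A single demonstration cannot distinguish between logical forms that produce the same state; later interactions, where those forms lead to different results, are what could separate them.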

While learning and adapting to each user provided a clean setting for the pilot study, we would not expect good coverage if each person had to teach the computer everything from scratch. Despite individual variations, there should be much in common across users, which allows the computer to learn faster and generalize better. For our calendar, we abandoned the individualized, user-specific language model in favor of a collective community model, in which a model consists of a set of grammar rules and parameters collected across all users and interactions. Each user contributes to the expressiveness and complexity of the language, as jargon and conventions are invented, modified, or rejected in a distributed way.
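As a minimal sketch of the community idea, assuming a simple additive (perceptron-style) reward on word-predicate weights, the hypothetical `CommunityModel` below keeps a single weight table that every user's feedback updates, so one user's teaching immediately changes how the system interprets another user's commands. The model described above also includes grammar rules; this sketch covers only the shared parameters.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple

Feature = Tuple[str, str]  # (word, predicate)


class CommunityModel:
    """Hypothetical shared model: one weight table for every user's sessions."""

    def __init__(self) -> None:
        self.weights: Dict[Feature, float] = defaultdict(float)
        self.contributors: Dict[Feature, Set[str]] = defaultdict(set)

    def score(self, utterance: str, predicate: str) -> float:
        return sum(self.weights[(w, predicate)] for w in utterance.lower().split())

    def reward(self, user: str, utterance: str, predicate: str, lr: float = 1.0) -> None:
        # Feedback from any user moves the same shared weights.
        for w in utterance.lower().split():
            self.weights[(w, predicate)] += lr
            self.contributors[(w, predicate)].add(user)

    def best(self, utterance: str, predicates: List[str]) -> str:
        return max(predicates, key=lambda p: self.score(utterance, p))


model = CommunityModel()
predicates = ["move", "cancel", "duplicate"]
model.reward("alice", "scrap team lunch", "cancel")  # alice teaches "scrap"
print(model.best("scrap golf lesson", predicates))   # cancel: bob benefits without teaching
print(model.contributors[("scrap", "cancel")])       # {'alice'}
```

Tracking contributors per feature is one illustrative way to see which users shaped a convention; how conventions are actually adopted or rejected is not specified here.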

Preliminary Results

Using Amazon Mechanical Turk (AMT), we paid 20 workers $2 each to play with our calendar. Out of 356 utterances in total, in 196 cases the worker selected a state from the suggested ranked list as the desired calendar state, and in 68 cases the worker used the calendar GUI to manually modify the calendar and submit feedback by demonstration.

A small subset of the collected commands is displayed in Figure 2. While a large percentage involved relatively simple commands (Basic), AMT workers did challenge the system with complex tasks using non-trivial phrasing (Advanced). As we hoped, users were highly inclined to use natural language and did not develop a technical, artificial language. A small number of commands were questionable in nature, being unusual calendar commands (see Questionable).

| Basic | Advanced | Questionable |
|---|---|---|
| move "ideas dinner tressider" to Saturday | change "family room" to "game night" and add location "family room | duplicate all calendar entries |
| cancel "team lunch" Friday between 12 pm and 1 pm | Duplicate the "family dinner" event to 9pm today | remove all appointments for the entire week |
| Change "golf lesson" to 5pm | remove all appointments on monday | Remove all entries |
| Schedule a "meeting with Bob" Tuesday at 10:30am" | change all "team lunch" to after 2 pm | |

Figure 2: A categorized sample of commands collected in our experiment.

To assess learning performance, we measure the system's ability to predict the correct calendar action given a natural language command. The top-ranked action is correct about 60% of the time, and the correct action is among the top three system-ranked actions about 80% of the time.
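These two numbers are top-1 and top-3 accuracy. A minimal sketch of the metric follows, assuming each command has a single gold action that can be compared for equality with the system's ranked outputs (in the calendar, one might instead compare resulting calendar states). The function name and the toy labels are illustrative only, not the experiment's data.

```python
from typing import Hashable, Sequence


def top_k_accuracy(ranked: Sequence[Sequence[Hashable]],
                   gold: Sequence[Hashable], k: int) -> float:
    """Fraction of commands whose gold action is among the top-k ranked actions."""
    if not gold or len(ranked) != len(gold):
        raise ValueError("ranked and gold must be non-empty and of equal length")
    hits = sum(1 for preds, g in zip(ranked, gold) if g in preds[:k])
    return hits / len(gold)


# Toy rankings for four commands (illustrative labels, not experimental data).
ranked = [["move", "cancel", "add"],
          ["cancel", "move", "add"],
          ["add", "cancel", "rename"],
          ["move", "add", "cancel"]]
gold = ["move", "move", "move", "cancel"]
print(top_k_accuracy(ranked, gold, 1))  # 0.25
print(top_k_accuracy(ranked, gold, 3))  # 0.75
```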

Discussion

The key challenge is figuring out which feedback signals are both usable for the computer and natural for humans. We explored providing alternatives and learning from demonstration, and we are also trying definitions and rephrasing. For example, when a user rephrases “my meetings tomorrow morning” as “my meetings tomorrow after 7 am and before noon”, we can infer the meaning of “morning”.
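One simple way to extract such a definition, offered here as an assumption rather than the method the system uses, is to align the two utterances, strip their shared prefix and suffix, and treat the leftover spans as phrase and meaning. The hypothetical `infer_definition` and `apply_definition` below do this at the token level and then reuse the definition on a new command.

```python
from typing import Optional, Tuple

Definition = Tuple[str, str]  # (new phrase, meaning in already-understood words)


def infer_definition(original: str, rephrased: str) -> Optional[Definition]:
    """Align two utterances and return the differing spans as (phrase, meaning)."""
    a, b = original.split(), rephrased.split()
    shortest = min(len(a), len(b))
    i = 0  # length of the shared prefix
    while i < shortest and a[i] == b[i]:
        i += 1
    j = 0  # length of the shared suffix, kept from overlapping the prefix
    while j < shortest - i and a[-1 - j] == b[-1 - j]:
        j += 1
    phrase = " ".join(a[i:len(a) - j])
    meaning = " ".join(b[i:len(b) - j])
    return (phrase, meaning) if phrase and meaning else None


def apply_definition(utterance: str, definition: Definition) -> str:
    """Rewrite every occurrence of the defined phrase into its meaning."""
    phrase, meaning = definition[0].split(), definition[1].split()
    if not phrase:
        return utterance
    tokens, out, k = utterance.split(), [], 0
    while k < len(tokens):
        if tokens[k:k + len(phrase)] == phrase:
            out.extend(meaning)
            k += len(phrase)
        else:
            out.append(tokens[k])
            k += 1
    return " ".join(out)


d = infer_definition("my meetings tomorrow morning",
                     "my meetings tomorrow after 7 am and before noon")
print(d)  # ('morning', 'after 7 am and before noon')
print(apply_definition("move my calls tomorrow morning", d))
```

Pure prefix/suffix alignment fails when a rephrasing also reorders words; it is meant only to show how a rephrasing pair carries a usable definition.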

Looking forward, we believe NLIs must learn through interaction with users, and improve over time. NLIs have the potential to replace GUIs and scripting for many tasks, and doing so can bridge the great digital divide of skills and enable all of us to better make use of computers.