Having broken up our documents (and also our query) into tokens, the easy case is if tokens in the query just match tokens in the token list of the document. However, there are many cases when two character sequences are not quite the same but you would like a match to occur. For instance, if you search for USA, you might hope to also match documents containing U.S.A.

Token normalization is the process of canonicalizing tokens so that matches occur despite superficial differences in the character sequences of the tokens. The most standard way to normalize is to implicitly create equivalence classes, which are normally named after one member of the set. For instance, if the tokens anti-discriminatory and antidiscriminatory are both mapped onto the term antidiscriminatory, in both the document text and queries, then searches for one term will retrieve documents that contain either.

The advantage of just using mapping rules that remove characters like hyphens is that the equivalence classing to be done is implicit, rather than being fully calculated in advance: the terms that happen to become identical as the result of these rules are the equivalence classes. It is only easy to write rules of this sort that remove characters. Since the equivalence classes are implicit, it is not obvious when you might want to add characters. For instance, it would be hard to know to turn antidiscriminatory into anti-discriminatory.
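As a rough illustration of implicit equivalence classing, the following minimal sketch applies a single hyphen-removal rule to both document tokens and query tokens. The function names (`normalize`, `build_index`, `search`) and the toy documents are assumptions made for this example, not part of any particular system.

```python
from collections import defaultdict

def normalize(token):
    """Hypothetical mapping rule: delete hyphens from a token."""
    return token.replace("-", "")

def build_index(docs):
    """Map each normalized term to the set of document IDs containing it."""
    index = defaultdict(set)
    for doc_id, tokens in docs.items():
        for token in tokens:
            index[normalize(token)].add(doc_id)
    return index

def search(index, query_token):
    """Apply the same rule to the query token, then look up the term."""
    return index.get(normalize(query_token), set())

# Toy collection (invented for illustration).
docs = {
    1: ["anti-discriminatory", "policy"],
    2: ["antidiscriminatory", "law"],
}
index = build_index(docs)

hyphenated_hits = search(index, "anti-discriminatory")
plain_hits = search(index, "antidiscriminatory")
```

Nothing in the code lists the equivalence class explicitly: the two spellings end up together only because the rule happens to map them to the same string. Both searches retrieve documents 1 and 2.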

An alternative to creating equivalence classes is to maintain relations between unnormalized tokens. This method can be extended to hand-constructed lists of synonyms such as car and automobile, a topic we discuss further in Chapter 9. These term relationships can be achieved in two ways. The usual way is to index unnormalized tokens and to maintain a query expansion list of multiple vocabulary entries to consider for a certain query term. A query term is then effectively a disjunction of several postings lists. The alternative is to perform the expansion during index construction. When the document contains automobile, we index it under car as well (and, usually, also vice versa). Use of either of these methods is considerably less efficient than equivalence classing, as there are more postings to store and merge. The first method adds a query expansion dictionary and requires more processing at query time, while the second method requires more space for storing postings. Traditionally, expanding the space required for the postings lists was seen as more disadvantageous, but with modern storage costs, the increased flexibility that comes from distinct postings lists is appealing.
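The two ways of realizing term relationships can be contrasted in a small sketch. It assumes a hypothetical hand-built synonym table `SYNONYMS` and represents postings lists as Python sets; all names and the toy documents are illustrative only.

```python
from collections import defaultdict

# Hypothetical hand-constructed synonym list (an assumption for illustration).
SYNONYMS = {"car": {"automobile"}, "automobile": {"car"}}

def index_unnormalized(docs):
    """Method 1 index: store each token exactly as it appears."""
    index = defaultdict(set)
    for doc_id, tokens in docs.items():
        for token in tokens:
            index[token].add(doc_id)
    return index

def index_expanded(docs):
    """Method 2 index: also file each document under the token's synonyms."""
    index = defaultdict(set)
    for doc_id, tokens in docs.items():
        for token in tokens:
            index[token].add(doc_id)
            for synonym in SYNONYMS.get(token, ()):
                index[synonym].add(doc_id)
    return index

def search_with_query_expansion(index, term):
    """Method 1 query: a disjunction over several postings lists."""
    result = set()
    for t in {term} | SYNONYMS.get(term, set()):
        result |= index.get(t, set())
    return result

def search_plain(index, term):
    """Method 2 query: a single postings list lookup."""
    return set(index.get(term, set()))

docs = {1: ["car", "repair"], 2: ["automobile", "dealer"], 3: ["bicycle"]}
plain = index_unnormalized(docs)
expanded = index_expanded(docs)

hits_query_time = search_with_query_expansion(plain, "car")
hits_index_time = search_plain(expanded, "car")
postings_plain = sum(len(p) for p in plain.values())
postings_expanded = sum(len(p) for p in expanded.values())
```

Both strategies return the same documents for car here, but the first does the extra work at query time while the second stores more postings, which mirrors the trade-off described above.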

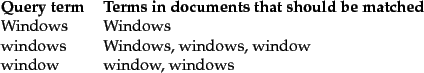

These approaches are more flexible than equivalence classes because the expansion lists can overlap while not being identical. This means there can be an asymmetry in expansion. An example of how such an asymmetry can be exploited is shown in Figure 2.6: if the user enters windows, we wish to allow matches with the capitalized Windows operating system, but this is not plausible if the user enters window, even though it is plausible for this query to also match lowercase windows.
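One way to picture such asymmetric expansion is as a per-term table of vocabulary entries to match. The table below is an assumption chosen to agree with the description in the text; it is not a transcription of the figure, and `EXPANSIONS` and `expand` are hypothetical names.

```python
# Hypothetical expansion lists: they overlap but are not identical,
# so they cannot be expressed as equivalence classes.
EXPANSIONS = {
    "Windows": {"Windows"},
    "windows": {"Windows", "windows", "window"},
    "window": {"window", "windows"},
}

def expand(term):
    """Return the vocabulary entries a query term should match.

    Terms without an entry match only themselves.
    """
    return EXPANSIONS.get(term, {term})

windows_matches = expand("windows")
window_matches = expand("window")
```

Under this table, the query windows reaches the capitalized Windows, while the query window does not, even though window still matches lowercase windows.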

The best amount of equivalence classing or query expansion to do is a fairly open question. Doing some definitely seems a good idea. But doing a lot can easily have unexpected consequences of broadening queries in unintended ways. For instance, equivalence-classing U.S.A. and USA to the latter by deleting periods from tokens might at first seem very reasonable, given the prevalent pattern of optional use of periods in acronyms. However, if I put in as my query term C.A.T., I might be rather upset if it matches every appearance of the word cat in documents.
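The unintended broadening can be reproduced in a few lines. This sketch assumes that period deletion is combined with case folding, which is an assumption added here so that the collision with lowercase cat actually occurs; the function names are hypothetical.

```python
def strip_periods(token):
    """Mapping rule from the text: delete periods from a token."""
    return token.replace(".", "")

def normalize(token):
    """Period deletion plus case folding (the latter is an assumption)."""
    return strip_periods(token).lower()

# The intended effect: the two spellings of the acronym coincide.
usa_merged = normalize("U.S.A.") == normalize("USA")

# The side effect: an unrelated acronym collapses onto a common word.
cat_collision = normalize("C.A.T.") == normalize("cat")
```

Both comparisons come out true, so the rule that helpfully merges U.S.A. with USA also makes a query for C.A.T. indistinguishable from the word cat.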

Below we present some of the forms of normalization that are commonly employed and how they are implemented. In many cases they seem helpful, but they can also do harm. In fact, you can worry about many details of equivalence classing, but it often turns out that, provided processing is done consistently to the query and to documents, the fine details may not have much aggregate effect on performance.